In this tutorial, we will be building a Non-Expo React Native application to recognize landmarks from images using Firebase's machine learning kit.

Firebase

Firebase is a platform developed by Google for creating mobile and web applications. It was originally an independent company founded in 2011. In 2014, Google acquired the platform, and it is now their flagship offering for app development. (Source: Wikipedia)

Firebase's ML Kit is a mobile SDK that brings Google's machine learning expertise to Android and iOS apps. There's no need to have deep knowledge of neural networks or model optimization to get started with the ML Kit. On the other hand, if you are an experienced ML developer, it provides APIs that help you use custom TensorFlow Lite models in your mobile apps. (Source: Firebase ML Docs)

Prerequisites

To proceed with this tutorial:

You'll need a basic knowledge of React & React Native.

You'll need a Firebase project with the Blaze plan enabled to access the Cloud Vision APIs.

Overview

We'll be going through these steps in this article:

- Development environment.

- Installing dependencies.

- Setting up the Firebase project.

- Setting up Cloud Vision API.

- Building the UI.

- Adding media picker.

- Recognize Landmarks from Images.

- Additional Configurations.

- Recap.

You can take a look at the final code in this GitHub Repository.

Development environment

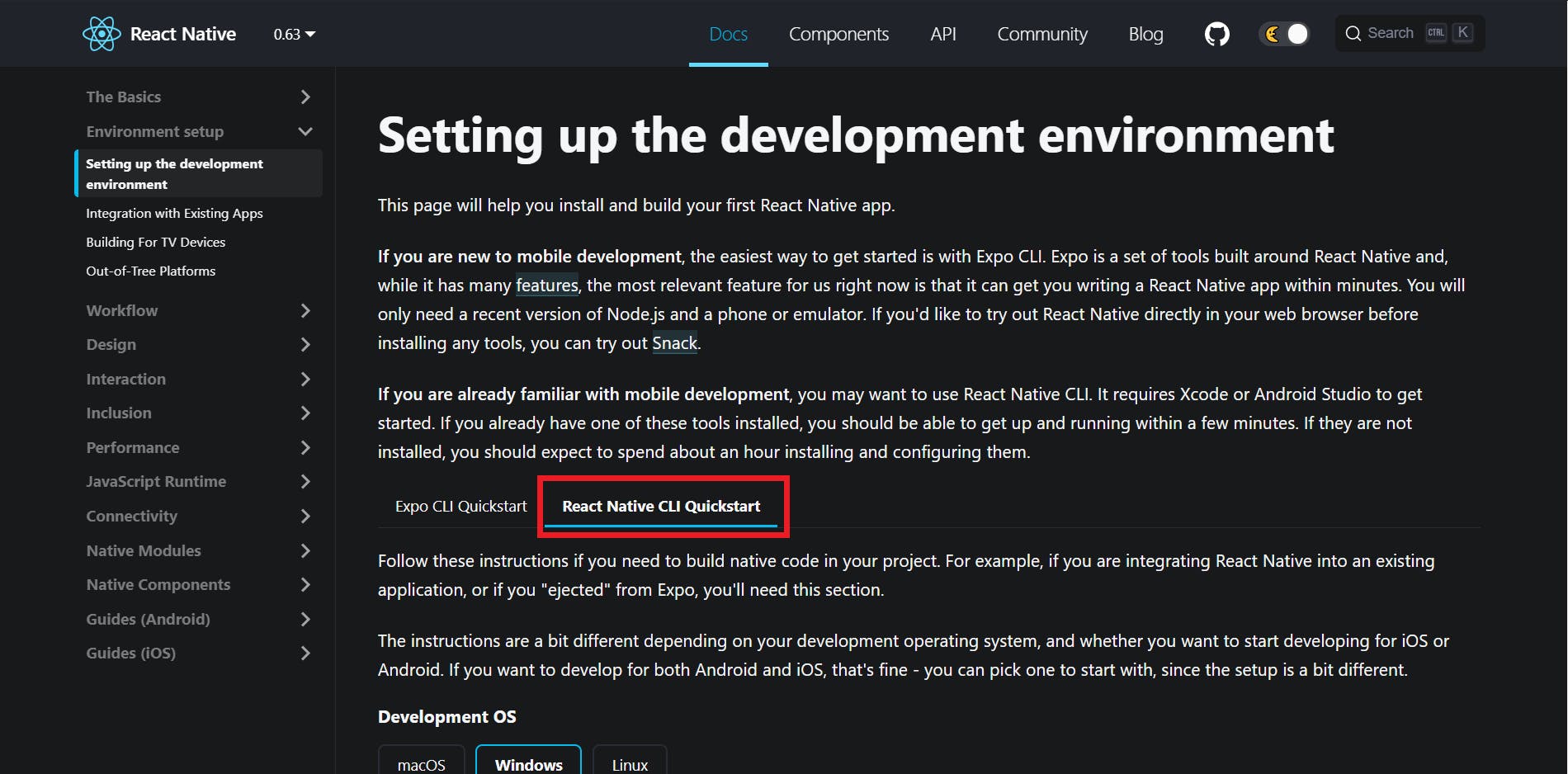

IMPORTANT - We will not be using Expo in our project.

You can follow this documentation to set up the environment and create a new React Native app.

Ensure you're following the React Native CLI Quickstart, not the Expo CLI Quickstart.

Installing dependencies

You can install these packages in advance or while going through the article.

"@react-native-firebase/app": "^10.4.0",

"@react-native-firebase/ml": "^10.4.0",

"react": "16.13.1",

"react-native": "0.63.4",

"react-native-image-picker": "^3.1.3"

To install a dependency, run:

npm i --save <package-name>

After installing the packages, for iOS, go into your ios/ directory, and run:

pod install

IMPORTANT FOR ANDROID

As you add more native dependencies to your project, it may bump you over the 64k method limit on the Android build system. Once you reach this limit, you will start to see the following error while building your Android application.

Execution failed for task ':app:mergeDexDebug'.

Use this documentation to enable multidexing. To learn more about multidex, view the official Android documentation.

Setting up the Firebase project

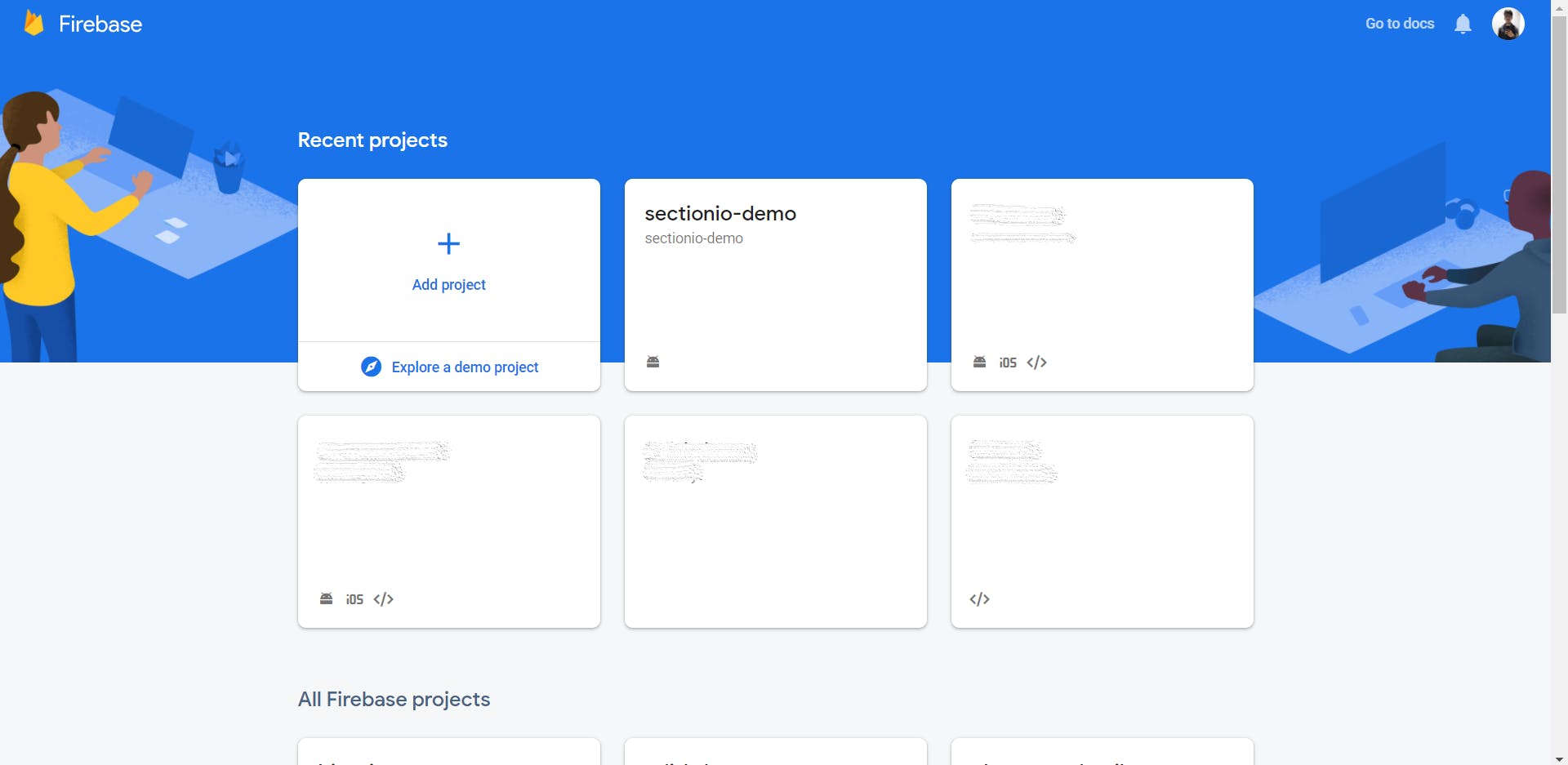

Head to the Firebase console and sign in to your account.

Create a new project.

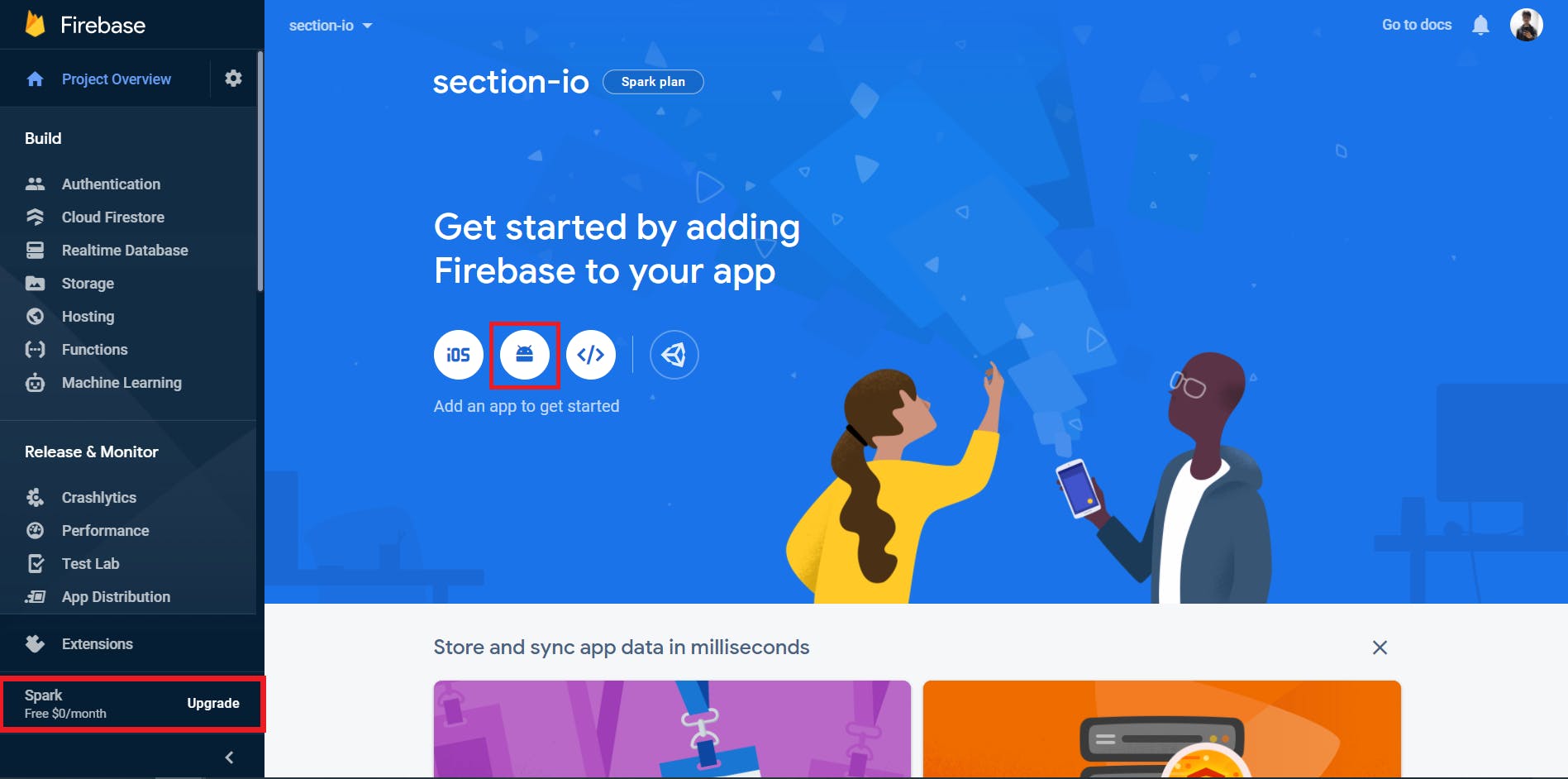

Once you create a new project, you'll see the dashboard. Upgrade your project to the Blaze plan.

Now, click on the Android icon to add an Android app to the Firebase project.

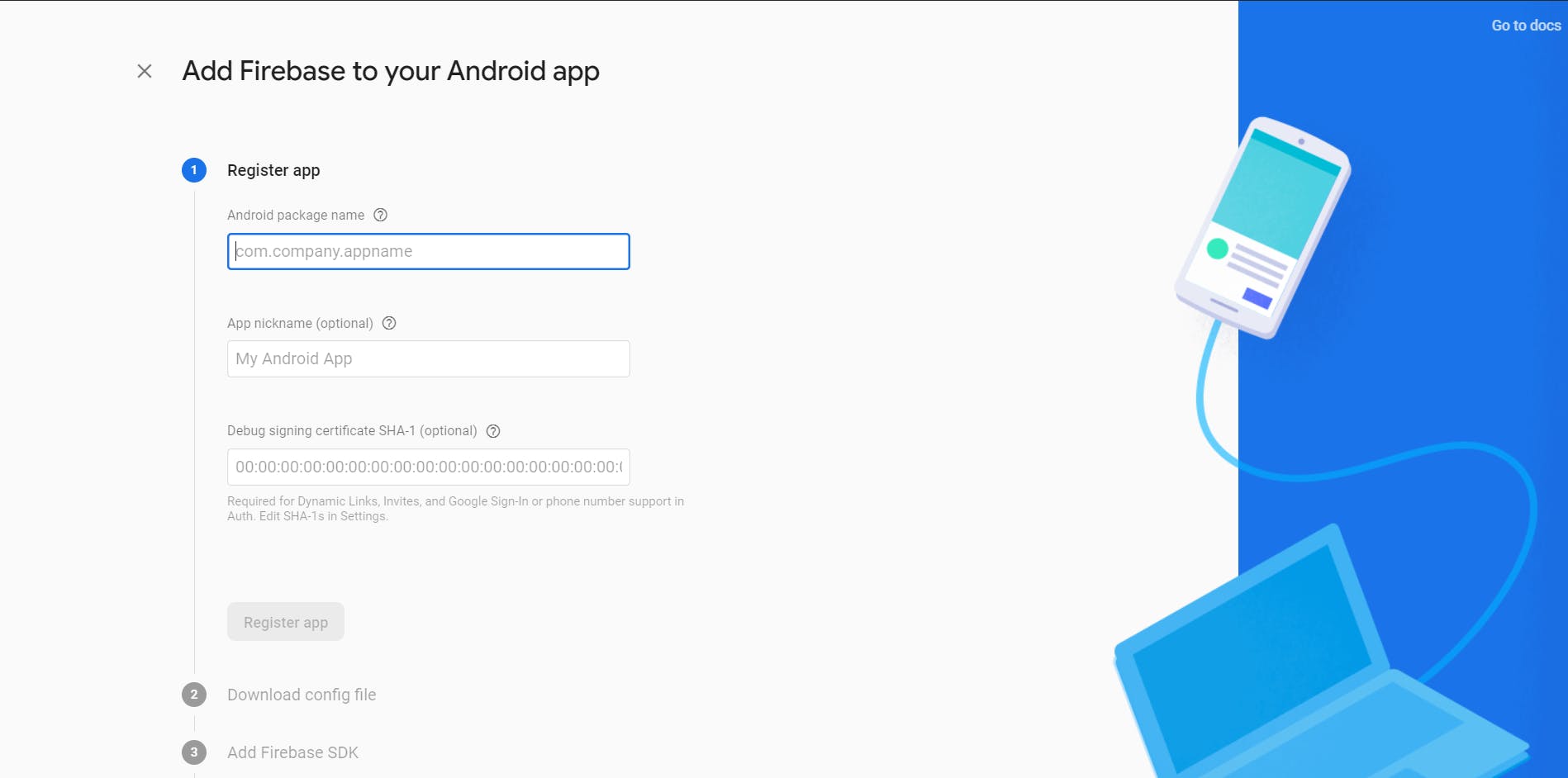

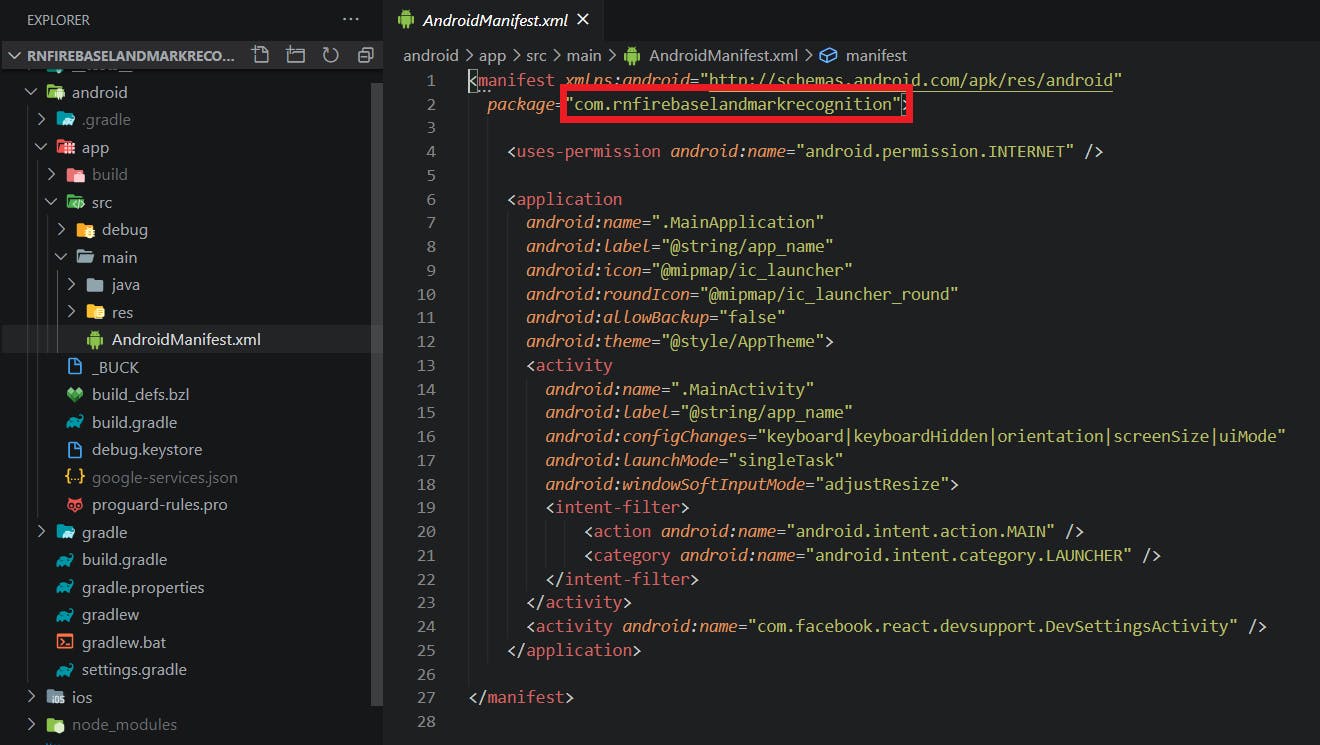

You will need the package name of the application to register it. You can find the package name in the AndroidManifest.xml file, which is located in android/app/src/main/.

Once you enter the package name and proceed to the next step, you can download the google-services.json file. You should place this file in the android/app directory.

After adding the file, proceed to the next step. It will ask you to add some configurations to the build.gradle files.

First, add the google-services plugin as a dependency inside of your android/build.gradle file:

buildscript {

dependencies {

// ... other dependencies

classpath 'com.google.gms:google-services:4.3.3'

}

}

Then, execute the plugin by adding the following to your android/app/build.gradle file:

apply plugin: 'com.android.application'

apply plugin: 'com.google.gms.google-services'

You need to perform some additional steps to configure Firebase for iOS. Follow this documentation to set it up.

We should install the @react-native-firebase/app package in our app to complete the set up for Firebase.

npm install @react-native-firebase/app

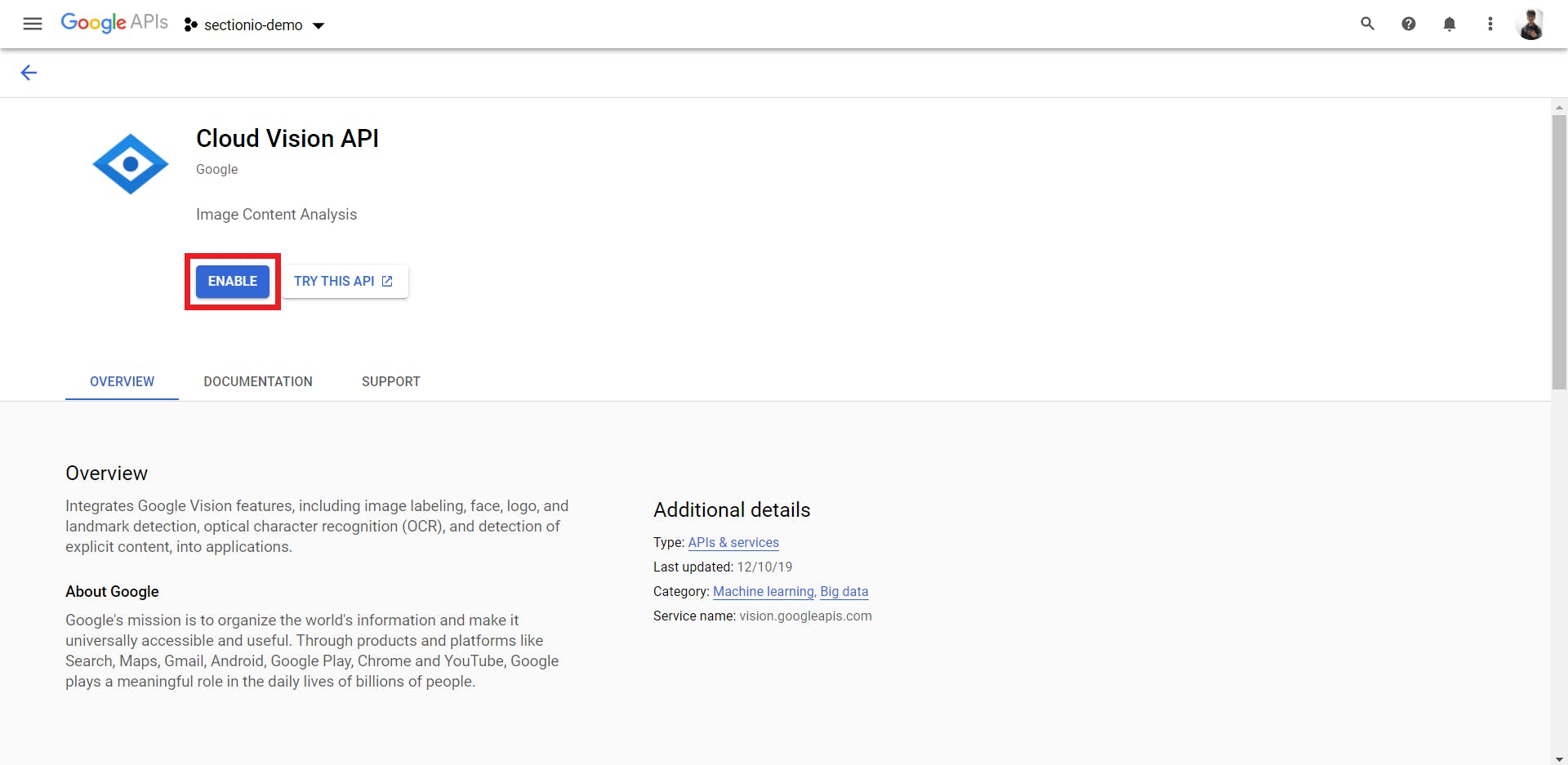

Setting up Cloud Vision API

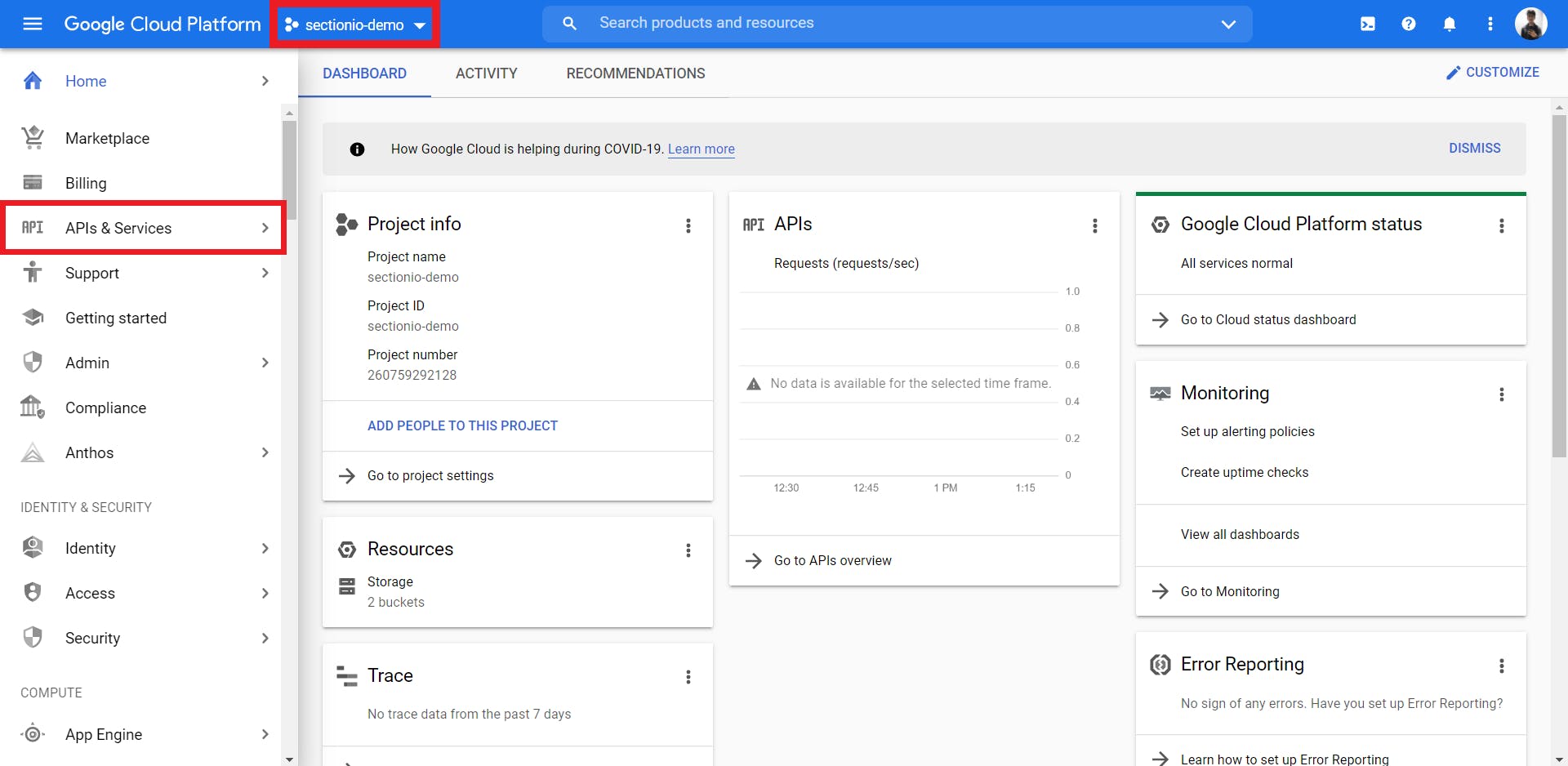

Head to the Google Cloud Console and select the Google project you are working on. Go to the APIs & Services tab.

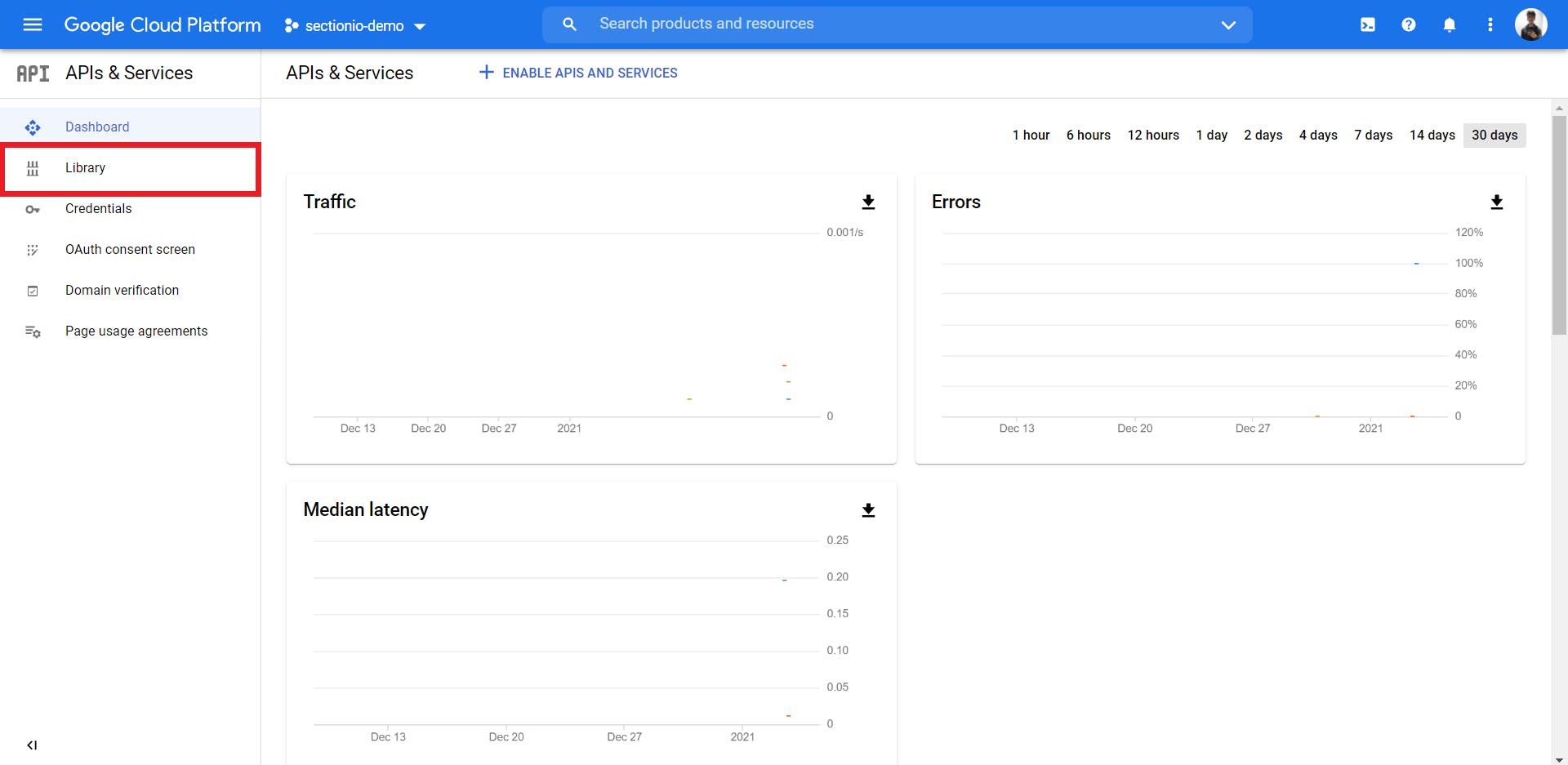

In the APIs & Services tab, navigate to the Library section.

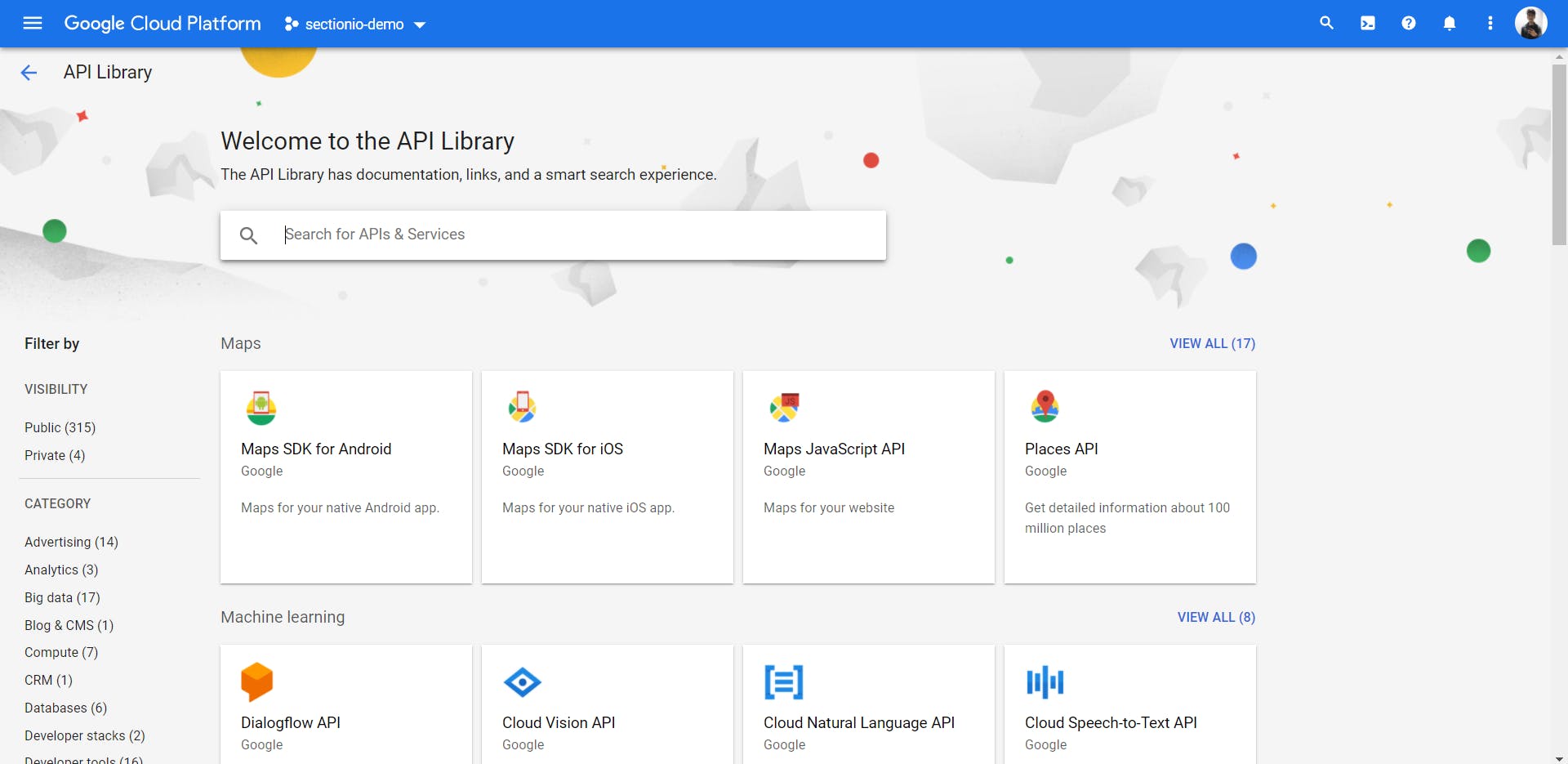

Search for Cloud Vision API.

Once you open the API page, click on the Enable button.

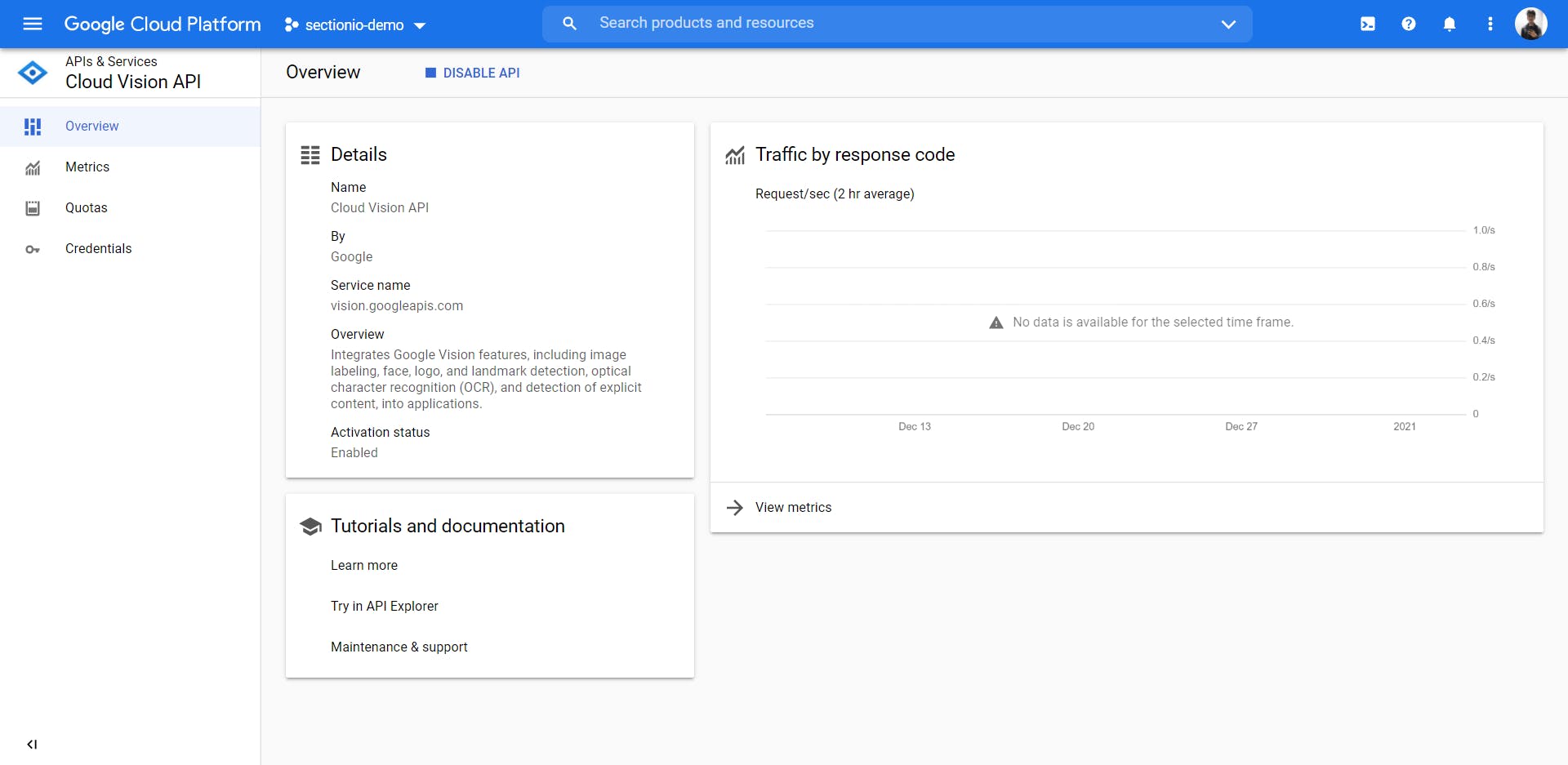

Once you've enabled the API, you'll see the Cloud Vision API Overview page.

With this, you have successfully set up the Cloud Vision API for your Firebase project. This will enable us to use the ML Kit for landmark recognition.

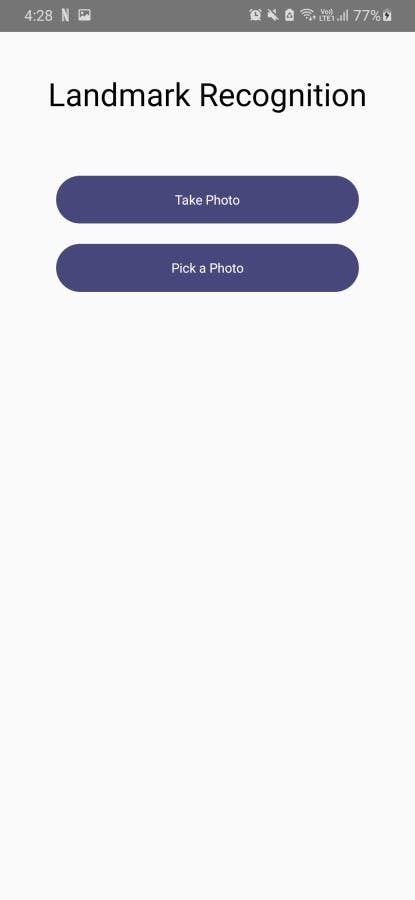

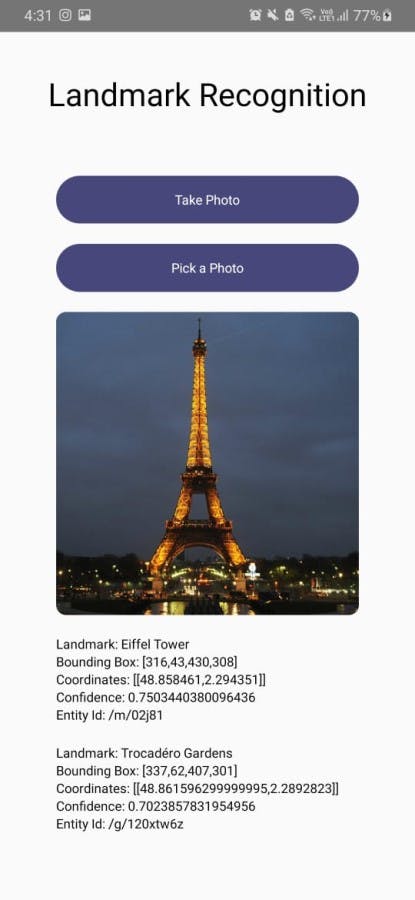

Building the UI

We'll be writing all of our code in the App.js file.

Let's add two buttons to the screen to take a photo and pick an image.

import React from 'react';

import { StyleSheet, Text, ScrollView, View, TouchableOpacity } from 'react-native';

export default function App() {

return (

<ScrollView contentContainerStyle={styles.screen}>

<Text style={styles.title}>Landmark Recognition</Text>

<View>

<TouchableOpacity style={styles.button}>

<Text style={styles.buttonText}>Take Photo</Text>

</TouchableOpacity>

<TouchableOpacity style={styles.button}>

<Text style={styles.buttonText}>Pick a Photo</Text>

</TouchableOpacity>

</View>

</ScrollView>

);

}

Styles:

const styles = StyleSheet.create({

screen: {

flex: 1,

alignItems: 'center',

},

title: {

fontSize: 35,

marginVertical: 40,

},

button: {

backgroundColor: '#47477b',

color: '#fff',

justifyContent: 'center',

alignItems: 'center',

paddingVertical: 15,

paddingHorizontal: 40,

borderRadius: 50,

marginTop: 20,

},

buttonText: {

color: '#fff',

},

});

Adding media picker

Let's install the react-native-image-picker to add these functionalities.

npm install react-native-image-picker

The minimum Android SDK version supported by React Native Image Picker is 21. If your project's minimum SDK version is below 21, raise minSdkVersion in android/build.gradle.

After the package is installed, import the launchCamera and launchImageLibrary functions from the package.

import { launchCamera, launchImageLibrary } from 'react-native-image-picker';

Both functions accept two arguments. The first argument is options for the camera or the gallery, and the second argument is a callback function. This callback function is called when the user picks an image or cancels the operation.

Check out the API Reference for more details about these functions.

Now let's add 2 functions, one for each button.

const onTakePhoto = () => launchCamera({ mediaType: 'photo' }, onImageSelect);

const onSelectImagePress = () => launchImageLibrary({ mediaType: 'photo' }, onImageSelect);

Let's create a function called onImageSelect. This is the callback function that we are passing to the launchCamera and the launchImageLibrary functions. We will get the details of the image that the user picked in this callback function.

We should start the landmark recognition only when the user did not cancel the media picker. If the user canceled the operation, the picker will send a didCancel property in the response object.

const onImageSelect = async (media) => {

if (!media.didCancel) {

// Landmark Recognition Process

}

};

You can learn more about the response object we get from the launchCamera and the launchImageLibrary functions here.
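The callback can also receive a response that reports an error rather than a cancellation. Here is a minimal sketch of a guard that decides whether recognition should start. It assumes the response carries the didCancel flag described above, plus errorCode and uri fields as in the 3.x releases of react-native-image-picker. The helper name getSelectedUri is hypothetical.

```javascript
// Hypothetical helper: returns the image URI when the picker response is usable,
// or null when the user cancelled, the picker reported an error, or no URI came back.
function getSelectedUri(response) {
  if (!response || response.didCancel) {
    return null; // the user dismissed the camera or the gallery
  }
  if (response.errorCode) {
    return null; // assumed field: set when the picker fails (e.g. missing permission)
  }
  return typeof response.uri === 'string' && response.uri.length > 0
    ? response.uri
    : null;
}

// Possible usage inside the callback:
// const uri = getSelectedUri(media);
// if (uri) { /* start landmark recognition with uri */ }
```

Keeping the check in one small function means both the camera and the gallery paths share the same rules.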

Now, pass these functions to the onPress prop of the TouchableOpacity for the respective buttons.

<View>

<TouchableOpacity style={styles.button} onPress={onTakePhoto}>

<Text style={styles.buttonText}>Take Photo</Text>

</TouchableOpacity>

<TouchableOpacity style={styles.button} onPress={onSelectImagePress}>

<Text style={styles.buttonText}>Pick a Photo</Text>

</TouchableOpacity>

</View>

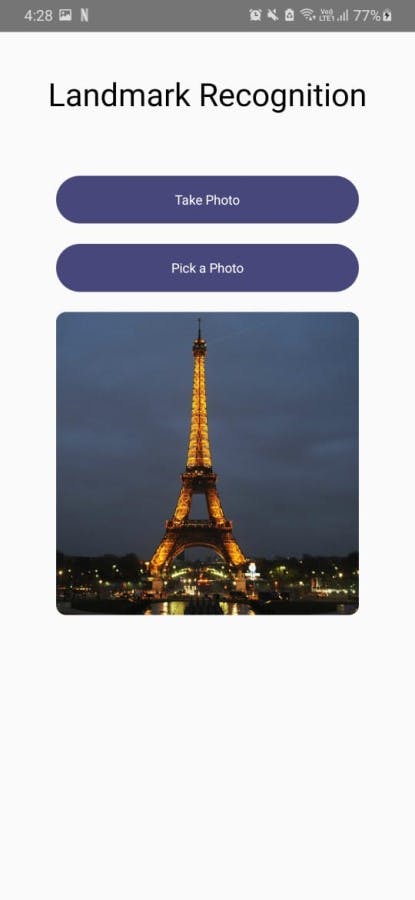

Let's create a state to display the selected image on the UI.

import { useState } from 'react';

const [image, setImage] = useState();

Now, let's add an Image component below the buttons to display the selected image. Remember to add Image to the list of components imported from react-native.

<View>

<TouchableOpacity style={styles.button} onPress={onTakePhoto}>

<Text style={styles.buttonText}>Take Photo</Text>

</TouchableOpacity>

<TouchableOpacity style={styles.button} onPress={onSelectImagePress}>

<Text style={styles.buttonText}>Pick a Photo</Text>

</TouchableOpacity>

<Image source={{ uri: image }} style={styles.image} />

</View>

Styles for the Image:

image: {

height: 300,

width: 300,

marginTop: 20,

borderRadius: 10,

},

If the user did not cancel the operation, we will set the image state with the URI of the selected image in the onImageSelect function.

const onImageSelect = async (media) => {

if (!media.didCancel) {

setImage(media.uri);

}

};

Recognize Landmarks from Images

Let's install the package for Firebase ML.

npm install @react-native-firebase/ml

Once the package is installed, let's import the package.

import ml from '@react-native-firebase/ml';

We should use the cloudLandmarkRecognizerProcessImage method in the ml package to process the image and get the landmarks in the image.

We will pass the URI of the selected image to this function.

const landmarks = await ml().cloudLandmarkRecognizerProcessImage(media.uri);

The function will process the image and return the list of landmarks that are identified in the image along with:

The 4-point coordinates of the landmarks on the image.

Latitude & Longitude of the landmarks.

The confidence the Machine Learning service has in its results.

An entity ID for use on Google's Knowledge Graph Search API.

Let's set up a state to store the results and render them in the UI. Since the result will be an array of landmarks, let's set the initial state to an empty array.

const [landmarks, setLandmarks] = useState([]);

Let's set the state to the response of the cloudLandmarkRecognizerProcessImage function.

const onImageSelect = async (media) => {

if (!media.didCancel) {

setImage(media.uri);

const landmarks = await ml().cloudLandmarkRecognizerProcessImage(

media.uri,

);

setLandmarks(landmarks);

}

};
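The cloud call can fail, for example when the device is offline or the Cloud Vision API is not enabled for the project. One way to keep the callback from throwing is to wrap the call. In this sketch the recognizer is passed in as a parameter so the snippet runs on its own. The name recognizeSafely and its return shape are hypothetical and not part of the Firebase package.

```javascript
// Hypothetical wrapper. `recognize` is any async function that takes an image URI
// and resolves to an array of landmarks, for example:
//   (uri) => ml().cloudLandmarkRecognizerProcessImage(uri)
async function recognizeSafely(recognize, uri) {
  try {
    const landmarks = await recognize(uri);
    // Normalise to an array so the UI can always call .map() on the result.
    return { landmarks: Array.isArray(landmarks) ? landmarks : [], error: null };
  } catch (err) {
    // Surface a readable message instead of letting the rejection escape.
    const message = err instanceof Error ? err.message : String(err);
    return { landmarks: [], error: message };
  }
}
```

Inside onImageSelect, the result's landmarks array could go straight into setLandmarks, while a non-null error could be shown to the user.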

We'll use this state to render the details in the UI.

{landmarks.map((item, i) => (

<View style={{ marginTop: 20, width: 300 }} key={i}>

<Text>Landmark: {item.landmark}</Text>

<Text>BoundingBox: {JSON.stringify(item.boundingBox)}</Text>

<Text>Coordinates: {JSON.stringify(item.locations)}</Text>

<Text>Confidence: {item.confidence}</Text>

<Text>Entity ID: {item.entityId}</Text>

</View>

))}
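Since each result carries a confidence value, the list can be trimmed and ordered before rendering. This is a small sketch that assumes confidence is a number between 0 and 1 and uses the field names shown above. The helper name topLandmarks and the 0.5 default threshold are illustrative choices, not values from the Firebase documentation.

```javascript
// Hypothetical helper: keeps landmarks at or above a confidence threshold
// and orders them from most to least confident.
function topLandmarks(landmarks, minConfidence = 0.5) {
  return landmarks
    // filter() returns a new array, so the sort below does not mutate the input.
    .filter(
      (item) =>
        typeof item.confidence === 'number' && item.confidence >= minConfidence,
    )
    .sort((a, b) => b.confidence - a.confidence);
}

// Possible usage in the component:
// {topLandmarks(landmarks).map((item, i) => ( ... ))}
```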

Additional Configurations

The cloudLandmarkRecognizerProcessImage method accepts an optional configuration object.

maxResults: Sets the maximum number of results of this type.

modelType: Sets the model type for the detection. By default, the function will use the STABLE_MODEL. However, if you feel that the results are not up-to-date, you can optionally use the LATEST_MODEL.

apiKeyOverride: API key to use for the ML API. If not set, the default API key from firebase.app() will be used.

enforceCertFingerprintMatch: Only allow registered application instances with matching certificate fingerprints to use the ML API.

Example:

import ml, { MLCloudLandmarkRecognizerModelType } from '@react-native-firebase/ml';

await ml().cloudLandmarkRecognizerProcessImage(imagePath, {

maxResults: 2, // undefined | number

modelType: MLCloudLandmarkRecognizerModelType.LATEST_MODEL, // LATEST_MODEL | STABLE_MODEL

apiKeyOverride: "<-- API KEY -->", // undefined | string,

enforceCertFingerprintMatch: true, // undefined | false | true,

});
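When only some of these options are needed, the configuration object can be assembled conditionally. This sketch assumes that omitted keys fall back to the defaults described above. The helper buildLandmarkOptions is hypothetical, and the model type value is passed in rather than imported so that the snippet stays self-contained.

```javascript
// Hypothetical helper: copies only the options that were actually provided,
// so everything else is left to the library defaults.
function buildLandmarkOptions({
  maxResults,
  modelType,
  apiKeyOverride,
  enforceCertFingerprintMatch,
} = {}) {
  const options = {};
  if (Number.isInteger(maxResults) && maxResults > 0) {
    options.maxResults = maxResults;
  }
  if (modelType !== undefined) {
    // e.g. MLCloudLandmarkRecognizerModelType.LATEST_MODEL
    options.modelType = modelType;
  }
  if (typeof apiKeyOverride === 'string' && apiKeyOverride.length > 0) {
    options.apiKeyOverride = apiKeyOverride;
  }
  if (typeof enforceCertFingerprintMatch === 'boolean') {
    options.enforceCertFingerprintMatch = enforceCertFingerprintMatch;
  }
  return options;
}

// Possible usage:
// await ml().cloudLandmarkRecognizerProcessImage(imagePath, buildLandmarkOptions({ maxResults: 2 }));
```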

Let's Recap

We set up our development environment and created a React Native app.

We created a Firebase project.

We set up the Cloud Vision API to use the Landmark Recognizer in the Firebase ML Kit.

We built a simple UI for the app.

We added the react-native-image-picker package to pick images using the gallery or capture images using the camera.

We installed the Firebase ML package.

We used the cloudLandmarkRecognizerProcessImage function in the ml package to recognize the landmarks in the images.

We displayed the results in the UI.

We learned about the additional configurations that we can pass to the cloudLandmarkRecognizerProcessImage function.

Congratulations, you did it! 🥳

Happy Coding!
